YouTube’s first quarterly “enforcement report” has revealed that the video-sharing site deleted 8.3 million videos for breaching its community guidelines between October and December 2017, with machines doing most of the work.

The Google-owned site has come under pressure over the past year for not doing enough to tackle extreme and offensive content. Several high-profile brands pulled their ads after discovering they were being run alongside such material.

To reassure advertisers and head off scrutiny from regulators, YouTube recently began publishing a quarterly Community Guidelines Enforcement Report that sets out its efforts to purge the site of content that breaches its terms of service.

According to this first report, YouTube removed 8.3 million videos between October and December 2017. The company said the majority were spam or contained sexual content, while others featured abusive, violent, or terrorist-related material.

The data, which excludes content removed for copyright or other legal reasons, shows that YouTube’s automated tools now do most of the work, flagging the majority of the videos that end up being removed.

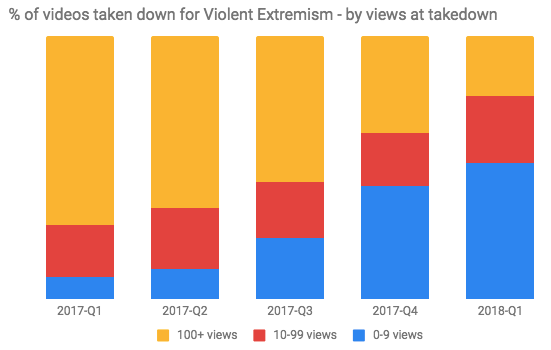

YouTube said its algorithms flagged 6.7 million of the removed videos, which were then reviewed by human moderators before being deleted. Of those, 76 percent were removed before anyone other than the moderators had watched them.

Sexually explicit videos attracted 9.1 million reports from the website’s users, while hateful or abusive content drew 4.7 million.

Most of the complaints came from India, the US and Brazil.

The report does not reveal how many inappropriate videos were reported on, or removed from, YouTube Kids.

YouTube also announced that it is adding a “reporting dashboard” to users’ accounts, letting them track the status of any videos they have reported as inappropriate.

Read more on the official YouTube blog: https://youtube.googleblog.com/2018/04/more-information-faster-removals-more.html