Launched back in 2018, Lookout taps AI to help blind and visually impaired users navigate, giving auditory cues as they encounter objects, text, and people within range.

With the revamp, Google’s AI can now identify food products in the supermarket, a feature designed to help visually impaired shoppers.

A new update lets a computer voice announce aloud which food product it thinks a person is holding, based on the item’s visual appearance. Google says the feature will “be able to distinguish between a can of corn and a can of green beans”.

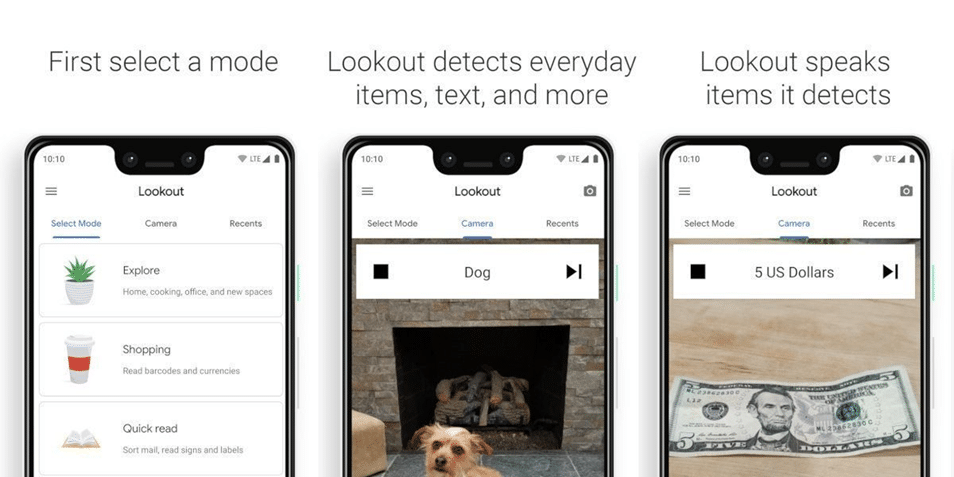

By keeping their smartphone pointed forward with the rear camera unobscured, users can rely on Lookout to detect and identify items in a scene.

According to a post on Google’s AI blog, the Android app stores a database of some two million “popular products” on the phone, and this catalogue changes depending on where the user is in the world.

Lookout uses technology similar to that of Google Lens, the app that can identify what a smartphone camera is looking at and show the user more information. Lookout already had a mode that reads any text the camera is pointed at, as well as an “explore mode” that identifies objects and text.

Lookout was previously available only in the US and only in English. Today, to mark its global debut, Google is adding support for four more languages (French, Italian, German, and Spanish) and expanding compatibility beyond Pixel smartphones to any device with at least 2GB of RAM running Android 6.0 or newer. The company is also rolling out a new design that simplifies switching between modes.

The redesigned Lookout moves the mode selector, which was previously full-screen, to the app’s bottom row. Users can swipe between modes and optionally use a screen reader, such as Google’s own TalkBack, to identify the option they’ve selected.

Lookout now also gives audio hints, such as “try rotating the product to the other side”, when it can’t immediately spot a barcode, label, or ad.

“Quick Read” is another enhanced Lookout mode. As its name implies, it reads aloud snippets of text from things like envelopes and coupons, even when they are held in reverse orientation. A document-reading mode (Scan Document) captures longer text and lets users read at their own pace, use a screen-reading app, or manually copy and paste the text into a third-party app.

When it launched the app last year, Google recommended placing the smartphone in a front shirt pocket or on a lanyard around the neck so the camera could identify things directly in front of the user.

Google also says it has made improvements to the app based on feedback from visually impaired users.